By the year 2020 customer experience will overtake price and product as the key brand differentiator.

In terms of customer experience, human-to-human interaction has always been a primary driver of brand loyalty. But as more companies embrace digital transformation, there is an increasing focus on customer experience's digital offshoot: user experience. And it's worth the investment. Forrester Research calculates that a 10-percentage-point improvement in a company's customer experience score can translate into more than $1 billion in revenue.

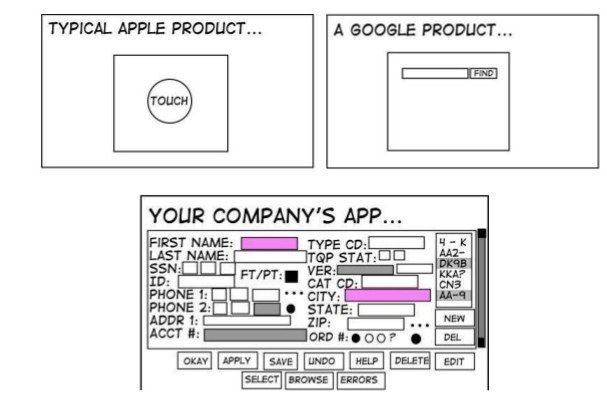

Poor human-machine interaction translates into poor user and customer experience.

As a digital provider, it's up to us to enable easy communication between man and machine. We know that for an application or website to be intuitive, design is the number one priority. But what if the demands of the product are so detailed that you can't rely on design alone to deliver a superior user interface experience?

According to a recent study published by Accenture, artificial intelligence (AI) is the new user interface (UI). Even Google, the most famous example of a simple, user-friendly interface, is turning to machine learning to improve customer experience.

I would like to share some research I have been doing on how to improve a user interface experience using artificial intelligence.

Using Artificial Intelligence to Benefit Users - An Exploration

Recently we have been working on a prototype for users to look up personal contact information using a diverse range of search criteria. For the sake of this article let's say it's a web application that enables recruiters to find potential employees in an HR agency database.

Such information is typically retrieved from databases using Structured Query Language (SQL), something our developers might be fluent in but the majority of users are not.

The most obvious design option was a filter-based interface that builds a SQL query to retrieve the stored information. However, the search criteria were so diverse and the dataset so large that this interface would have needed more than 90 filter options: a tedious process that no user deserves or expects in 2017.

We also had to take into account that the app was not likely to be used on a daily basis, which was all the more reason to make the interface extra user-friendly. So we decided to create an interface where the user could query the database using plain English.

As part of my research, I explored different examples of smart search syntax we could use for the interface. I found two pertinent ways of expressing a search query in a human-like way:

Option 1

- Electrician, with a car, <10 km from Fribourg

- Watchmaker, meticulous, 2 to 5 years experience

- Electronic engineer, embedded systems, C++ and Java, analytical skills

- Manager, medical, ENTP, empathy and communication skills

| Pros | Cons |
| --- | --- |
|  | User needs to be taught how to write a query (albeit easy to repeat once mastered) |

Option 2

- Electrician with a car living within 10 km of Fribourg

- A meticulous watchmaker that has 2 to 5 years of experience

- An electronic engineer specialized in embedded systems, with experience in C++ and Java, and with good analytical skills as well

- Manager in the medical field, with an ENTP personality, with empathy and communication skills

| Pros | Cons |
| --- | --- |
| Allows more complex information to be provided (in option 1's syntax, the example sentences above would require parentheses) | User needs to think about how to structure the information according to the rules of a natural language |
| Could be less ambiguous from a user perspective |  |

We estimated that both options could offer an acceptable user experience, provided that, for option 2, we could keep the risk of misinterpretation low. But simplicity was the goal of the exercise, so ultimately the lower complexity of option 1 led us to pursue it further.

At this point, it was clear to us that we needed a natural language processing tool to transform the "natural" user input into a SQL request (or something close enough). It was then that I started to explore Natural Language Processing, an exciting new world for us.

What exactly is Natural Language Processing?

Definition: Natural language processing (NLP) is a component of artificial intelligence, concerned with the programming of computers so that they can process human (natural) language. The goal of natural language processing is to aid interaction between computer and human despite language differences.

The complexity of an NLP tool can be characterized along two dimensions:

- Breadth (of understanding): size of vocabulary and grammar.

- Depth (of understanding): degree to which its understanding approximates that of a fluent native speaker.

Combining the two gives four possible categories of NLP tool, as described in the table below:

|  | Narrow | Broad |
| --- | --- | --- |
| Shallow | Language interpreters that require minimal complexity but have a small range of applications | Systems that attempt to understand the contents of a document, such as a news release, beyond simple keyword matching, and to judge its suitability for a user |
| Deep | Systems that explore and model mechanisms of understanding, but still have limited application | Beyond the current state of the art |

For our purpose, the processing tool's vocabulary was related to personal details (e.g. location, age, gender), personal skills (communication, analytical) and professional capacities (previous positions, technical skills). Because this vocabulary covers a single, limited domain, a narrow natural language processing tool was a good fit for our needs.

Back to the smart syntax options. With the first option, which uses a condensed human-like syntax, the user already separates the keywords with commas. The processing tool therefore doesn't need to understand the structure of a whole sentence, only short, independent fragments of text, so this option requires a narrow-shallow processing tool.

If we had chosen the second option instead, which uses an ordinary human syntax, the keywords would need to be extracted from a natural language sentence. This requires a narrow-deep processing tool.
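With option 1, splitting the input on commas already yields one criterion per fragment, which is what makes narrow-shallow processing sufficient. Here is a minimal Python sketch of that first step. It assumes that lowercasing and collapsing whitespace are the only normalization needed, and `split_query` is a hypothetical helper name, not code from our prototype:

```python
def split_query(text: str) -> list[str]:
    """Split a comma-separated smart-search query into normalized criteria.

    Assumption: commas are the only separators and each fragment is one
    criterion, so no sentence-level parsing is needed (narrow-shallow).
    """
    fragments = []
    for part in text.split(","):
        # Lowercase and collapse runs of whitespace inside the fragment.
        fragment = " ".join(part.lower().split())
        # Skip empty fragments produced by stray or trailing commas.
        if fragment:
            fragments.append(fragment)
    return fragments


print(split_query("Electrician, with a car, <10 km from Fribourg"))
# ['electrician', 'with a car', '<10 km from fribourg']
```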

Which Narrow Natural Language Processing Tool to use for our Project?

Our goal was to convert a natural language query into a SQL query. I quickly discovered a specific category of NLP tools called Natural Language Interfaces to Databases (NLIDB), which receive a natural language query and generate a database query as a result. Bingo. That was exactly what we needed! OK, so we just needed to find an NLIDB tool suitable for our project, right? Not so fast...

Problem 1: Most NLIDB tools have been abandoned. They were created by students researching NLIDB techniques, who quickly stopped maintaining them once their papers were published.

Problem 2: Most of these tools expect a question as input, which doesn't match our chosen syntax, so we would need a workaround.

The sheer number of tools also posed a challenge, but after some internet trawling I gathered a list of candidate tools that could still suit our purpose.

My selection criterion was that the tool must be able to translate a query like "Mechanic, agricultural machinery, autonomous, with a car" into a SQL query approximately like this: `SELECT id FROM candidats_table WHERE profession = 'mechanic' AND specialisation = 'agricultural machinery' AND personal_skills = 'autonomous' AND has_a_car = 'true'`
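To make that target concrete, here is a small Python sketch that builds such a query from (column, value) pairs and runs it against an in-memory SQLite table. The table and column names come from the example query. The two sample rows are invented for illustration. Binding values as `?` parameters instead of pasting them into the SQL string is a choice made for this sketch, not something the tools below require:

```python
import sqlite3

# Columns a query may filter on (names taken from the example query).
# Column names cannot be bound as parameters, so they are checked against this whitelist.
ALLOWED_COLUMNS = {"profession", "specialisation", "personal_skills", "has_a_car"}


def build_select(conditions: list[tuple[str, str]]) -> tuple[str, list[str]]:
    """Build a parameterized SELECT whose conditions are all combined with AND."""
    clauses, params = [], []
    for column, value in conditions:
        if column not in ALLOWED_COLUMNS:
            raise ValueError(f"unknown column: {column}")
        clauses.append(f"{column} = ?")
        params.append(value)
    where = " AND ".join(clauses) if clauses else "1 = 1"
    return f"SELECT id FROM candidats_table WHERE {where}", params


# Illustrative in-memory table with two made-up candidates.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE candidats_table (id INTEGER PRIMARY KEY, profession TEXT, "
    "specialisation TEXT, personal_skills TEXT, has_a_car TEXT)"
)
conn.executemany(
    "INSERT INTO candidats_table VALUES (?, ?, ?, ?, ?)",
    [
        (1, "mechanic", "agricultural machinery", "autonomous", "true"),
        (2, "mechanic", "trucks", "autonomous", "false"),
    ],
)

# The pairs a translator would need to produce for
# "Mechanic, agricultural machinery, autonomous, with a car".
sql, params = build_select([
    ("profession", "mechanic"),
    ("specialisation", "agricultural machinery"),
    ("personal_skills", "autonomous"),
    ("has_a_car", "true"),
])
rows = conn.execute(sql, params).fetchall()
print(rows)  # [(1,)]
```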

Here is a sample of some of those I looked into:

| Tool | Notes |
| --- | --- |
| Quepy |  |
| FriendlyData | A commercial NLIDB tool that looked like a good fit for our project. It seemed more flexible regarding the natural query syntax than any of the other tools we investigated, so it was definitely one to keep on our radar. |
| FriendlyData | A well-maintained and well-documented Python framework for NLP. It can translate queries into SQL, as long as a grammar file is provided. |

Elasticsearch

When I presented the results of my research to the team, one of my colleagues suggested that Elasticsearch could meet our criteria and be a good option. Elasticsearch is a highly scalable, open-source full-text search and analytics engine. It lets you store, search, and analyze large volumes of data quickly and in near real time, and it is generally used as the underlying engine for applications with complex search features and requirements. In short:

- Elasticsearch offers user-friendly, natural full-text search

- If there is a huge amount of data and speed is the deal breaker, Elasticsearch is the way to go

- Elasticsearch has its own techniques for storing related data together, as described in the "Handling Relationships" section of its documentation

To use this tool, we would still need to configure it with knowledge of how to access our data. For example, for it to handle a search for a secretary with accounting skills, we would need to tell it which database table corresponds to secretaries and which field corresponds to accounting skills.
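That configuration step boils down to a mapping from recognized terms to index fields. The Python sketch below uses hypothetical field names (`profession`, `skills`, `has_car`) and only builds the request body in Elasticsearch's query DSL. It puts `match` clauses for full-text criteria inside a `bool` query and adds a `term` clause in filter context for the yes/no attribute. Sending the body to a cluster is deliberately left out:

```python
import json

# Hypothetical configuration: which index field each recognized term belongs to.
TERM_TO_FIELD = {
    "secretary": "profession",
    "accounting": "skills",
}
# Hypothetical yes/no attributes; these become exact-match filters.
FLAG_TO_FIELD = {
    "with a car": "has_car",
}


def build_es_query(fragments: list[str]) -> tuple[dict, list[str]]:
    """Return an Elasticsearch bool query body plus the fragments it could not map."""
    must, filters, unknown = [], [], []
    for fragment in fragments:
        if fragment in FLAG_TO_FIELD:
            # term: exact match; in filter context it does not affect scoring.
            filters.append({"term": {FLAG_TO_FIELD[fragment]: True}})
        elif fragment in TERM_TO_FIELD:
            # match: full-text query, analyzed with the field's analyzer.
            must.append({"match": {TERM_TO_FIELD[fragment]: fragment}})
        else:
            unknown.append(fragment)
    body = {"query": {"bool": {"must": must, "filter": filters}}}
    return body, unknown


body, unknown = build_es_query(["secretary", "accounting", "with a car", "entp"])
print(json.dumps(body, indent=2))
print(unknown)  # ['entp']
```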

Prototype Implementation Time!

So, after all that theory and evaluation, and now that we knew which tool to use, it was time to start implementing a prototype.

As we got to work, the team soon rated the difficulty of our selected approach for human/machine search at 9 on a scale of 1 to 10. We decided that for a first prototype it made sense to use a simplified approach that doesn't involve any existing NLP tools. Instead, we used the knowledge gained during the research to design our own narrow-shallow NLP tool.

This tool uses a correspondence table to translate a fixed set of possible expressions into SQL queries. Thanks to the way information is structured in our chosen comma-separated syntax, there is a reasonably limited number of ways to express the same information (e.g. "with a car" = "with car" = "has a car" = "car owner"). With a few additions, such as special keywords for OR and for ranges, this approach fully met our requirements.
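The correspondence-table idea can be sketched in a few dozen lines of Python. Everything specific here is an assumption for illustration: the table entries, the column names (`profession`, `personal_skills`, `technical_skills`, `has_a_car`, `years_experience`) and the exact form of the special keywords. In this sketch, `or` inside one fragment marks alternatives, and `<low> to <high> years experience` marks a range. Fragments that match nothing are returned rather than guessed at:

```python
import re
from typing import Optional

# A condition is an SQL snippet with ? placeholders plus the values to bind.
Condition = tuple[str, list]

# Hypothetical correspondence table: known expression -> SQL condition.
CORRESPONDENCE: dict[str, Condition] = {
    "electrician": ("profession = ?", ["electrician"]),
    "watchmaker": ("profession = ?", ["watchmaker"]),
    "meticulous": ("personal_skills LIKE ?", ["%meticulous%"]),
    "c++": ("technical_skills LIKE ?", ["%c++%"]),
    "java": ("technical_skills LIKE ?", ["%java%"]),
}
# Several ways of expressing the same information share one condition.
for synonym in ("with a car", "with car", "has a car", "car owner"):
    CORRESPONDENCE[synonym] = ("has_a_car = ?", [1])

# Range keyword, e.g. "2 to 5 years experience" or "2 to 5 years of experience".
RANGE = re.compile(r"^(\d+) to (\d+) years? (?:of )?experience$")


def translate_fragment(fragment: str) -> Optional[Condition]:
    """Translate one comma-separated fragment, or return None if it is unsupported."""
    match = RANGE.match(fragment)
    if match:
        low, high = int(match.group(1)), int(match.group(2))
        return ("years_experience BETWEEN ? AND ?", [low, high])
    # OR keyword: every alternative must be a known expression.
    alternatives = [CORRESPONDENCE.get(part.strip()) for part in fragment.split(" or ")]
    if any(alt is None for alt in alternatives):
        return None
    if len(alternatives) == 1:
        return alternatives[0]
    sql = " OR ".join(cond for cond, _ in alternatives)
    params = [value for _, values in alternatives for value in values]
    return (f"({sql})", params)


def translate(query: str) -> tuple[str, list, list[str]]:
    """Return (sql, params, unsupported_fragments) for a comma-separated query."""
    clauses, params, unsupported = [], [], []
    for fragment in (" ".join(p.lower().split()) for p in query.split(",")):
        if not fragment:
            continue
        condition = translate_fragment(fragment)
        if condition is None:
            unsupported.append(fragment)
        else:
            clauses.append(condition[0])
            params.extend(condition[1])
    where = " AND ".join(clauses) or "1 = 1"
    return f"SELECT id FROM candidats_table WHERE {where}", params, unsupported


print(translate("Electrician, car owner, C++ or Java, ENTP"))
```

Because each fragment is looked up independently, supporting a new expression means adding one table entry. The unsupported list is a natural hook for collecting expressions that users type but the table does not cover yet.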

Behold our final filter-free interface!

Once a user inputs their query and hits "let's go", they are presented with an automatically generated filter-view of their selection criteria, which they can easily adjust if needed.
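One way to model that filter view is sketched below in Python. Each recognized fragment becomes a filter object the user can remove or edit, and the edited filters are written back to the search box as a comma-separated query. The `Filter` class and the expression lookup are hypothetical. A fuller version would reuse the correspondence table and would also keep unrecognized fragments visible, since this sketch simply skips them:

```python
from dataclasses import dataclass

# Hypothetical lookup: recognized expression -> field the generated filter controls.
EXPRESSION_TO_FIELD = {
    "electrician": "profession",
    "watchmaker": "profession",
    "with a car": "has_a_car",
    "car owner": "has_a_car",
    "meticulous": "personal_skills",
}


@dataclass
class Filter:
    field: str  # database field the filter applies to
    label: str  # expression shown to the user, e.g. "with a car"


def to_filters(query: str) -> list[Filter]:
    """Generate the editable filter view from a comma-separated query."""
    filters = []
    for fragment in (" ".join(p.lower().split()) for p in query.split(",")):
        field = EXPRESSION_TO_FIELD.get(fragment)
        if field is not None:  # unrecognized fragments are skipped in this sketch
            filters.append(Filter(field, fragment))
    return filters


def to_query(filters: list[Filter]) -> str:
    """Write the (possibly edited) filters back as comma-separated query text."""
    return ", ".join(f.label for f in filters)


filters = to_filters("Electrician, car owner, meticulous")
# The user removes the car filter in the filter view.
filters = [f for f in filters if f.field != "has_a_car"]
print(to_query(filters))  # electrician, meticulous
```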

So thanks to our NLP tool, we will be able to achieve our goal of using Natural Language Processing to create a super-simple, user-friendly interface. Once we add analytics that record every time a user types an unsupported expression, we can keep extending the tool's capabilities as it is used. The same idea should also let us anticipate and handle user misspellings, increasing the probability of a successful first search.
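Both ideas can be sketched with Python's standard library. This is an illustration, not the prototype's code. It uses `difflib.get_close_matches` (string-similarity matching) as a stand-in for misspelling handling and a `Counter` as a stand-in for real analytics. The list of known expressions is hypothetical, and the similarity cutoff of 0.8 is an arbitrary choice:

```python
import difflib
from collections import Counter
from typing import Optional

# Hypothetical expressions the correspondence table already supports.
KNOWN_EXPRESSIONS = ["electrician", "watchmaker", "meticulous", "with a car", "car owner"]

# Stand-in for analytics: how often each unsupported expression was typed.
unsupported_counts: Counter = Counter()


def resolve(fragment: str) -> Optional[str]:
    """Map a fragment to a known expression, correcting close misspellings."""
    fragment = " ".join(fragment.lower().split())
    if fragment in KNOWN_EXPRESSIONS:
        return fragment
    # Likely misspelling: take the most similar known expression above the cutoff.
    close = difflib.get_close_matches(fragment, KNOWN_EXPRESSIONS, n=1, cutoff=0.8)
    if close:
        return close[0]
    # Record the unsupported expression so the table can be extended later.
    unsupported_counts[fragment] += 1
    return None


print(resolve("Watchmakr"))  # watchmaker
print(resolve("ENTP"))  # None
print(unsupported_counts.most_common())  # [('entp', 1)]
```

The counts only show which expressions are worth adding. The tool improves when someone adds them to the table. The cutoff trades missed corrections against wrong ones.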

Conclusion

Having researched the topic and started to apply the theory in practice, I can draw conclusions on several levels:

- Technical:

  - When an application requires narrow-shallow natural language processing, i.e. when the ways of expressing the same information are predictable and limited, we should probably consider building our own solution instead of adopting a tool designed for more complex applications.

- Company level:

  - We didn't end up with a super techy, hyped solution, but the best solution for an application is always the simplest possible solution that is not too simple. Using Natural Language Processing, we will be able to create a product that gives a much better user experience than a more standard solution, while minimizing complexity. This is not a perfect human-machine interaction, but it is still a step forward in terms of non-standard approaches. That said, as the product evolves, we may reach a point where a more complex NLP tool and approach become beneficial.

  - We found out that NLP is a very interesting world with a lot of potential for the future. Advanced NLP techniques were overkill for this project, but we can almost take it for granted that someday we will work on a project where these techniques are the right choice.

Interested in more innovative topics like Artificial Intelligence? For more in-depth studies and insights into our explorations with new techniques and technologies, sign up for our quarterly newsletter.

Looking for a partner who uses cutting edge concepts to connect users with your products? Talk to us today!